Friction as a Feature: Building Trust in AI-Powered Workflows

AI can improve healthcare, but only if users trust it. Learn how UX design choices shape trust, safety, and adoption in patient-care tools.


AI has been all anyone can talk about for years, and everyday users, including physicians, nurses, and patients, are starting to adopt new AI tools.

But wait. Do you hear that? It’s the murmur of concerned users. And rightfully so, because when AI gets it wrong, the consequences for a patient’s health can be disastrous. Those concerns aren’t just philosophical; they’re design challenges. If users don’t trust the AI, they’ll find ways to work around it, ignore it, or reject the tool altogether.

So what do you, as a product or UX person, do about this? How do you get users to trust the AI? How do you design an AI feature that saves time while preserving the rigor that patient care requires? And how do you keep yourself from reusing the same old interfaces?

You’ve found the right blog. After working through AI features for many of our healthcare clients, we’ve assembled considerations that can help you break out of your initial ideas and consider something new.

Transparency beyond source links.

Transparency in AI is critical for trust, and in healthcare it’s uniquely complicated. The data lives across systems, some of it is sensitive, and users are often under time pressure. Linking out to the source data has become the default ‘transparency’ feature, but it’s not the only option. In healthcare, that source often lives in a completely different system, so every link pulls users out of their workflow.

Think of a nurse filling out a prior authorization (PA) form. Do they want to jump out to their EHR just to verify a detail? No. But they will need to verify it, because the risk of a denied PA later is enough to warrant a thorough check. Transparency isn’t only about what you link out to; it’s also about what you can pull in.

Starting ideas for transparency:

- Displaying confidence scores (or intervals, where the model provides them) helps users gauge the model's certainty about a recommendation.

- Showcasing conflicting data makes uncertainty visible.

- Pulling direct reference text removes paraphrasing mistakes.

- Embedded iframes of source data keep users in their workflow while they confirm information.

- Linking out to source data is the ultimate deep dive into the facts.

Add friction where it matters most.

When AI is creating or auto-populating data, healthcare users need to review it for accuracy. But how can you, as a designer, ensure that they’re actually reviewing it?

One of the worst and yet most prevalent measures of UX success in healthcare is the number of clicks. There’s a time and place to reduce clicks, but it should never be the only driver behind design decisions. Sometimes, the added friction of a click can be just what the doctor ordered. (Okay, okay. That was a bad pun. Don’t fire me.)

Map out the information your AI is generating. Where do you have high confidence in accuracy? Where is there a low impact on outcomes? These are areas where low- to no-friction interactions work wonders. But the lower your confidence level or the more important the data is to outcomes, the higher the friction should be. For example, if an AI suggests lab codes (low risk, high accuracy), auto-approve may be fine. But if it summarizes a clinical note that affects a prescription decision, add friction like an explicit review step.

Starting ideas for review interactions:

- Data that needs no review

- ‘Scroll to review’ à la Terms & Conditions

- Ability to reject input

- Checkbox to approve data individually

- Focused review workflow

- Bulk approve
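The confidence-versus-impact mapping described earlier can be written down as a simple decision rule, which makes a team's assumptions explicit and easy to debate. The TypeScript sketch below is purely hypothetical: the `GeneratedField` shape, the `chooseReviewInteraction` function, the 0.9 and 0.6 thresholds, the sample confidence values, and the ordering of interactions from least to most friction are all assumptions for illustration, not clinical guidance or a prescribed implementation.

```typescript
// Hypothetical sketch: map AI confidence and clinical impact to a review interaction.
// Thresholds, names, and the friction ordering are illustrative assumptions only.

type Impact = "low" | "medium" | "high";

// Assumed ordering, least to most friction.
type ReviewInteraction =
  | "no-review"
  | "bulk-approve"
  | "scroll-to-review"
  | "approve-individually"
  | "focused-review";

interface GeneratedField {
  name: string;
  confidence: number; // model-reported confidence, assumed to be in [0, 1]
  impact: Impact; // how strongly the field affects patient outcomes
}

// Assumed cut-offs; a real team would tune these with clinicians and test data.
const HIGH_CONFIDENCE = 0.9;
const LOW_CONFIDENCE = 0.6;

function chooseReviewInteraction(field: GeneratedField): ReviewInteraction {
  const { confidence, impact } = field;
  if (confidence < 0 || confidence > 1) {
    throw new RangeError(`confidence must be in [0, 1], got ${confidence}`);
  }
  // High-impact data always gets an explicit review step, even when confident.
  if (impact === "high") {
    return confidence >= HIGH_CONFIDENCE ? "approve-individually" : "focused-review";
  }
  // Low confidence on any other field still warrants a per-item check.
  if (confidence < LOW_CONFIDENCE) {
    return "approve-individually";
  }
  if (impact === "medium") {
    return confidence >= HIGH_CONFIDENCE ? "bulk-approve" : "scroll-to-review";
  }
  // Low impact: skip review when confident, otherwise allow a quick bulk pass.
  return confidence >= HIGH_CONFIDENCE ? "no-review" : "bulk-approve";
}

// Usage with the lab-code and clinical-note examples from the text
// (confidence values are hypothetical).
const fields: GeneratedField[] = [
  { name: "lab code suggestion", confidence: 0.97, impact: "low" },
  { name: "clinical note summary for a prescription", confidence: 0.97, impact: "high" },
];
for (const f of fields) {
  console.log(`${f.name}: ${chooseReviewInteraction(f)}`);
}
```

Keeping the thresholds as named constants means designers and clinicians can adjust them after usability testing without touching the logic, and the friction only ever increases as confidence drops or impact rises.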

Structure summaries for quick learning.

Another common application for AI in healthcare is data aggregation and summarization. For example, it can be time-consuming to piece together an overall picture of a patient who sees multiple specialists and has a long medical history. AI can surface the key details so everyone involved stays informed.

Conversational interfaces like ChatGPT have a well-tested format of headings and bullet points, but that format isn’t the only way to present information for learning and context. Sometimes the last thing users want is to be hit with a wall of text.

Consider what users actually need from this information. Is it quick-hitting data points to make decisions? Is it a pattern-finding exercise? Is it simply to aid recall during context switching?

Starting ideas for data aggregation & learning:

- Nuggets of content that bring context into the existing workflow

- Templates of information based on users’ behavior (e.g., what questions do they always ask for cases like this?)

- Bullet points à la ChatGPT

- Short paragraphs of text

- Progressive disclosure

- Chat interface

I can’t close a blog about UX design without stressing the importance of knowing your users. A deep understanding of their priorities, workflows, stressors, and beliefs will make this whole process much easier.

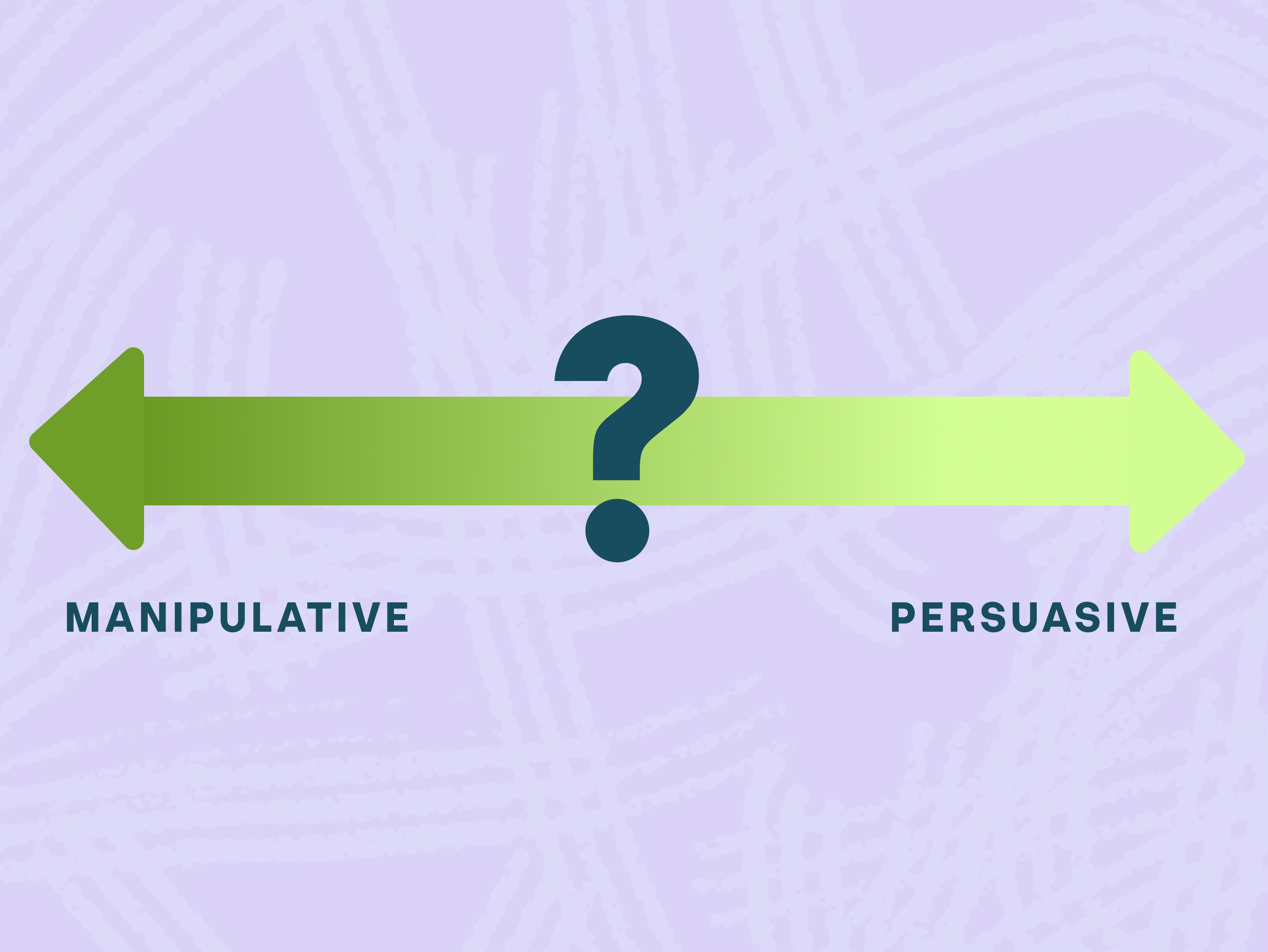

If you take away one thing from this, I hope it’s the need to explore and test a wide range of content and interactions. Start from an ultra-streamlined future state, then work backward, adding friction step by step to build a continuum of options. This process helps your team see where trust, verification, and user control should live, ultimately leading to safer, more trusted products.

Ready. Set. Go.

